Heuristic search is a decision-time planning algorithm that computes values for possible future states whenever we need to decide on an action.

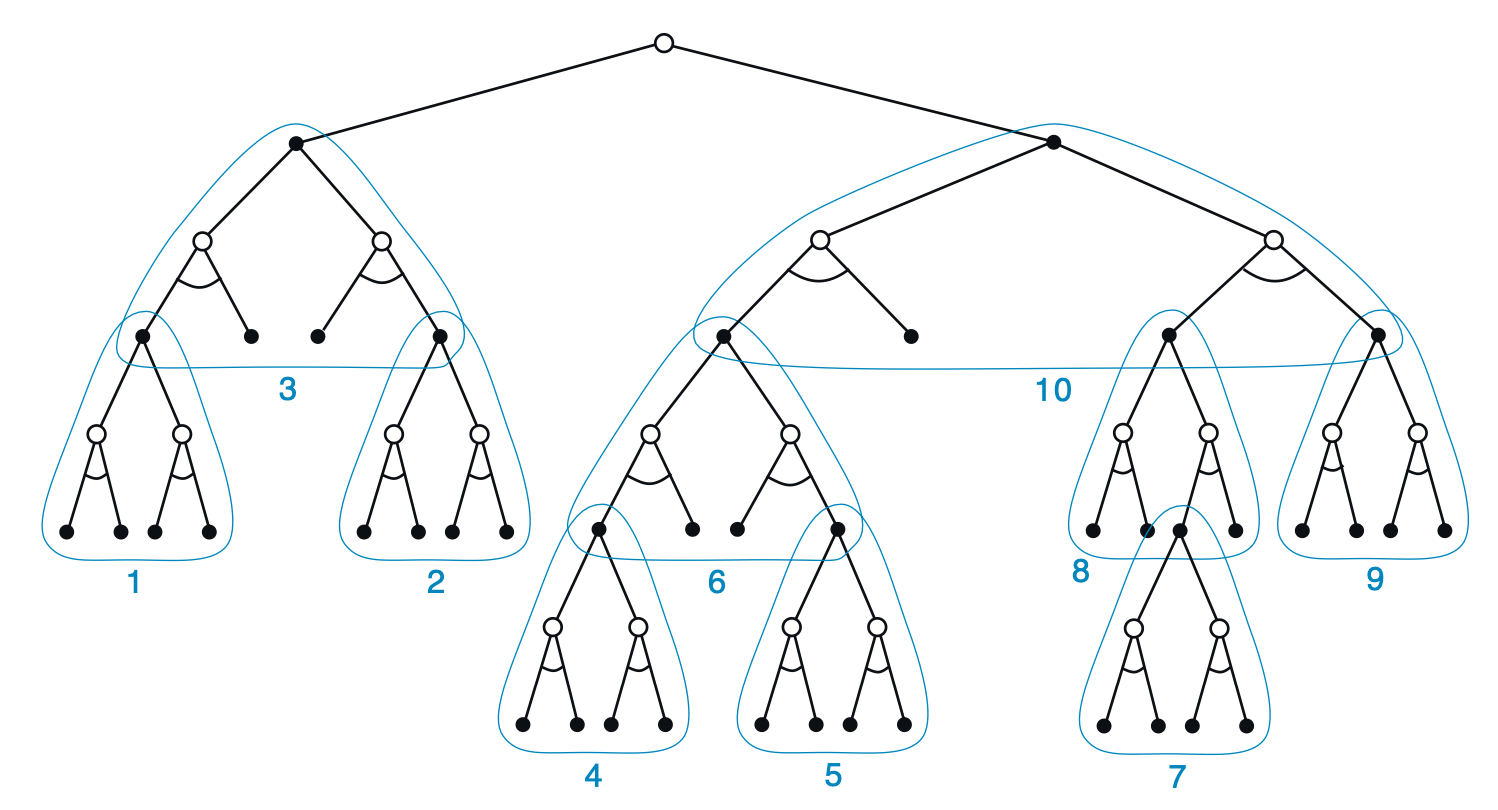

Specifically, we consider the tree of possible continuations (by traversing the environment dynamics

After computing the values and backing it up to our current state, we greedily pick the max-value action. Note that this procedure is similar to a greedy policy but instead of looking one step into the future, we go much deeper into the possible future states.

Focused Depth

The core advantage of heuristic search is that it focuses updates on the current state, thus applying our limited compute to the most relevant states for our immediate decision. Going deeper than one step allows us to gather more accurate estimates as well since we’re using the environment dynamics and actual rewards rather than any other approximation method.