Theory

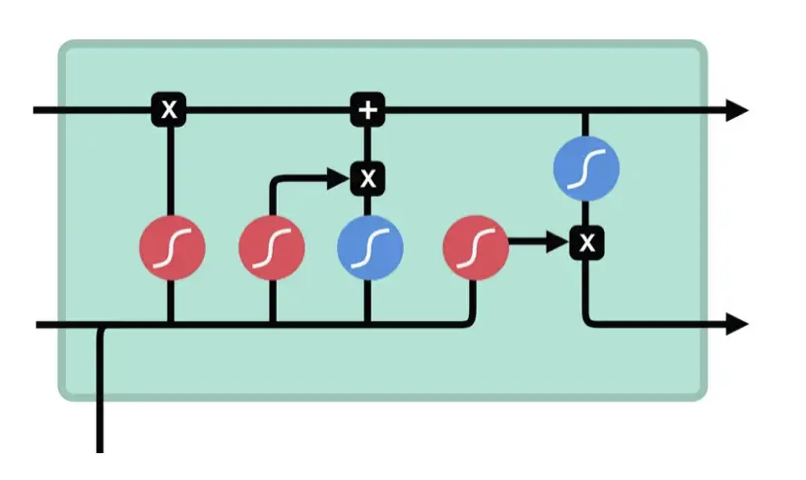

LSTM improves on the standard recurrent neural network (RNN) by keeping two separate recurrence tracks: a long-term memory (the cell state) and a short-term memory (the hidden state). This mitigates the difficulty standard RNNs have in retaining information from early in the sequence, a problem usually attributed to vanishing gradients.

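The short-term information $h_{t-1}$, together with the current input $x_t$, controls three gates that edit the long-term memory $c_{t-1}$:

- Forget gate uses $h_{t-1}$ and $x_t$ to choose parts of $c_{t-1}$ to "forget," or set to zero.
- Input gate again uses $h_{t-1}$ and $x_t$ to choose parts of the candidate memory $\tilde{c}_t$ to write into the cell state, forming $c_t$.
- Output gate uses $h_{t-1}$ and $x_t$ to select parts of the activated cell state $\tanh(c_t)$ to output as $h_t$.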
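For reference, the three gates can be written out in the common LSTM formulation. Here $h_{t-1}$ is the hidden state (short-term memory), $x_t$ the input, and $c_{t-1}$ the cell state (long-term memory). The weight matrices $W_f, W_i, W_c, W_o$, the biases $b_f, b_i, b_c, b_o$, and the concatenation $[h_{t-1}, x_t]$ are notation assumed for this sketch, not taken from the figure. $\sigma$ is the sigmoid and $\odot$ is elementwise multiplication.

$$
\begin{aligned}
f_t &= \sigma\left(W_f [h_{t-1}, x_t] + b_f\right) \\
i_t &= \sigma\left(W_i [h_{t-1}, x_t] + b_i\right) \\
\tilde{c}_t &= \tanh\left(W_c [h_{t-1}, x_t] + b_c\right) \\
c_t &= f_t \odot c_{t-1} + i_t \odot \tilde{c}_t \\
o_t &= \sigma\left(W_o [h_{t-1}, x_t] + b_o\right) \\
h_t &= o_t \odot \tanh(c_t)
\end{aligned}
$$

The cell state is updated only by elementwise scaling and addition, so information in $c_{t-1}$ can pass through many steps largely unchanged whenever $f_t \approx 1$ and $i_t \approx 0$.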
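The gate logic above can be sketched as a single time step in plain Python, with no NumPy required. This is a minimal illustration. It assumes the common formulation in which each gate has one weight matrix and bias acting on the concatenation of $h_{t-1}$ and $x_t$. The names `lstm_step`, `gate`, `init_params`, and the `params` keys are hypothetical, and the small random initialization is an arbitrary choice.

```python
import math
import random


def sigmoid(z):
    # Numerically stable logistic function; output lies in (0, 1).
    if z >= 0:
        return 1.0 / (1.0 + math.exp(-z))
    e = math.exp(z)
    return e / (1.0 + e)


def matvec(W, v):
    # Multiply a matrix W (list of rows) by a vector v (list).
    return [sum(w * x for w, x in zip(row, v)) for row in W]


def gate(W, b, hx, act):
    # Affine map of [h_{t-1}, x_t] followed by an elementwise activation.
    return [act(z + bj) for z, bj in zip(matvec(W, hx), b)]


def lstm_step(x_t, h_prev, c_prev, params):
    """One LSTM time step (hypothetical helper); returns (h_t, c_t)."""
    hx = list(h_prev) + list(x_t)  # concatenation [h_{t-1}, x_t]
    f = gate(params["W_f"], params["b_f"], hx, sigmoid)          # forget gate
    i = gate(params["W_i"], params["b_i"], hx, sigmoid)          # input gate
    c_tilde = gate(params["W_c"], params["b_c"], hx, math.tanh)  # candidate memory
    o = gate(params["W_o"], params["b_o"], hx, sigmoid)          # output gate
    # Long-term track: keep the unforgotten part of c_{t-1}, add the selected candidate.
    c_t = [fj * cj + ij * cc for fj, cj, ij, cc in zip(f, c_prev, i, c_tilde)]
    # Short-term track: the output gate selects from the tanh-activated cell state.
    h_t = [oj * math.tanh(cj) for oj, cj in zip(o, c_t)]
    return h_t, c_t


def init_params(hidden, inputs, seed=0):
    # Small random weights and zero biases (an arbitrary illustrative choice).
    rng = random.Random(seed)
    params = {}
    for name in ("f", "i", "c", "o"):
        params["W_" + name] = [
            [rng.uniform(-0.1, 0.1) for _ in range(hidden + inputs)]
            for _ in range(hidden)
        ]
        params["b_" + name] = [0.0] * hidden
    return params


if __name__ == "__main__":
    params = init_params(hidden=3, inputs=2)
    h, c = [0.0] * 3, [0.0] * 3
    for x in ([1.0, 0.0], [0.0, 1.0], [0.5, 0.5]):
        h, c = lstm_step(x, h, c, params)
    print("h_t:", h)
    print("c_t:", c)
```

You can try giving the forget gate a large positive bias and the input gate a large negative bias. The step then returns $c_t \approx c_{t-1}$, which is the mechanism that lets the long-term track carry information across many steps.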

Model

The model structure is depicted below.

Note that sigmoids (in red) are used for selection since they’re bounded from