Theory

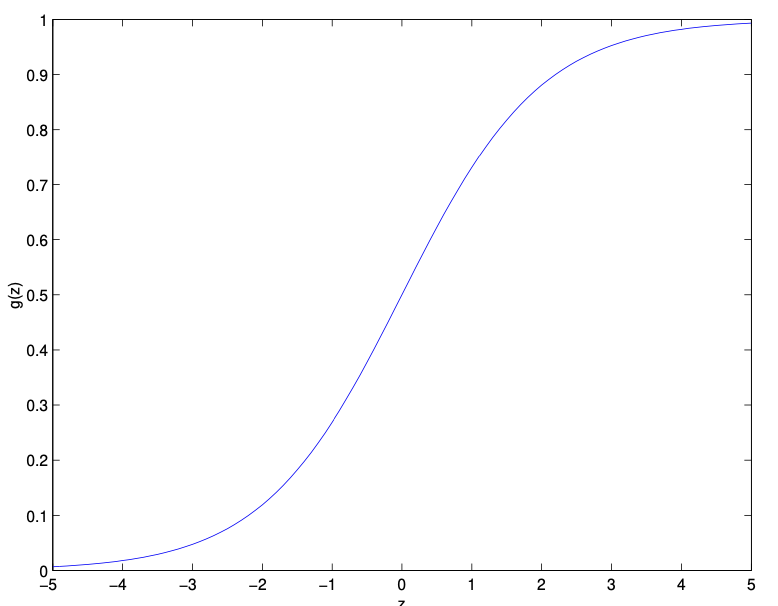

Logistic regression uses a similar idea as 🏦 Linear Regression but transforms the output to probabilities in range

We’ll apply weights

The equation above enforces linear decision boundary at

is linear on

Now, the likelihood of our data can be calculated as a product,

It’s easier to optimize the log likelihood

With simple logistic regression, this is a concave down function with global optimum, which can be optimized via gradient ascent; this is also equivalent to minimizing the negation, which can be seen as optimizing logistic loss.

Note

We can also view our loss as a 💧 Cross Entropy loss, between the true one-hot encoded labels and probabilities generated by our model.

We can generalize logistic regression to softmax regression, which classifies multiple classes. The probability of each class is the ratio of its probability with respect to all classes, mathematically computed as

Model

Like linear regression, our model consists of weights

For multi-class classification, we’ll calculate individual probabilities for each class. With

Training

Given training data

- Randomly initialize weights

. - Repeatedly perform gradient ascent steps; gradient is calculated as

Note that for MLE, we drop the regularization term

Prediction

Given input

If it’s above threshold