Accuracy

Accuracy, the fraction of all predictions that are correct, is the main symmetric classification measure: it treats errors on either class the same way.
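As a minimal sketch of the definition, accuracy can be computed by counting matching labels. The function name `accuracy` and the example labels are illustrative assumptions, not part of the original notes.

```python
def accuracy(y_true, y_pred):
    """Fraction of predictions that match the actual labels."""
    if len(y_true) != len(y_pred):
        raise ValueError("y_true and y_pred must have the same length")
    if not y_true:
        raise ValueError("at least one label is required")
    correct = sum(1 for t, p in zip(y_true, y_pred) if t == p)
    return correct / len(y_true)


# Hypothetical labels: 1 = "yes", 0 = "no"
y_true = [1, 0, 1, 1, 0, 0, 1, 0]
y_pred = [1, 0, 0, 1, 0, 1, 1, 0]
acc = accuracy(y_true, y_pred)  # 6 of the 8 predictions match
```

Because every match counts equally, a false "yes" and a false "no" lower the score by the same amount.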

Precision and Recall

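Asymmetric measures include precision (the share of predicted positives that are actually positive), recall (also called sensitivity or the true positive rate), and specificity (the true negative rate, equal to one minus the false positive rate).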
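The three measures can be sketched from the four outcome counts of a binary classifier. The helper names (`binary_counts`, `precision`, `recall`, `specificity`) and the sample labels are assumptions made for illustration, and returning 0.0 for an empty denominator is one convention among several.

```python
def binary_counts(y_true, y_pred, positive=1):
    """Count true positives, false positives, true negatives, false negatives."""
    tp = fp = tn = fn = 0
    for t, p in zip(y_true, y_pred):
        if p == positive and t == positive:
            tp += 1
        elif p == positive:
            fp += 1
        elif t == positive:
            fn += 1
        else:
            tn += 1
    return tp, fp, tn, fn


def precision(tp, fp):
    """Of everything predicted positive, the fraction that is truly positive."""
    return tp / (tp + fp) if (tp + fp) else 0.0


def recall(tp, fn):
    """Of everything truly positive, the fraction that was found (sensitivity)."""
    return tp / (tp + fn) if (tp + fn) else 0.0


def specificity(tn, fp):
    """Of everything truly negative, the fraction predicted negative (1 - FPR)."""
    return tn / (tn + fp) if (tn + fp) else 0.0


# Hypothetical labels: 4 actual positives, 6 actual negatives
y_true = [1, 1, 1, 1, 0, 0, 0, 0, 0, 0]
y_pred = [1, 1, 1, 0, 1, 1, 0, 0, 0, 0]
tp, fp, tn, fn = binary_counts(y_true, y_pred)  # 3, 2, 4, 1
prec = precision(tp, fp)    # 3 / 5
rec = recall(tp, fn)        # 3 / 4
spec = specificity(tn, fp)  # 4 / 6
```

Each measure ignores one of the four cells, which is what makes these measures asymmetric: precision never looks at true negatives, for example.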

F1 Score

F1 score combines precision and recall into a single number by taking their harmonic mean.
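A short sketch of the harmonic-mean formula, F1 = 2PR / (P + R), follows. The function name `f1_score` and the input values are illustrative assumptions; returning 0.0 when both inputs are zero is a common convention, not something the notes specify.

```python
def f1_score(precision, recall):
    """Harmonic mean of precision and recall."""
    if precision + recall == 0:
        return 0.0  # convention chosen here to avoid division by zero
    return 2 * precision * recall / (precision + recall)


# Hypothetical values for illustration
f1 = f1_score(0.6, 0.75)         # 0.9 / 1.35, roughly 0.667
f1_lopsided = f1_score(1.0, 0.0)  # perfect precision cannot rescue zero recall
```

The harmonic mean is pulled toward the smaller of the two inputs, so a model cannot score well by maximizing only one of them.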

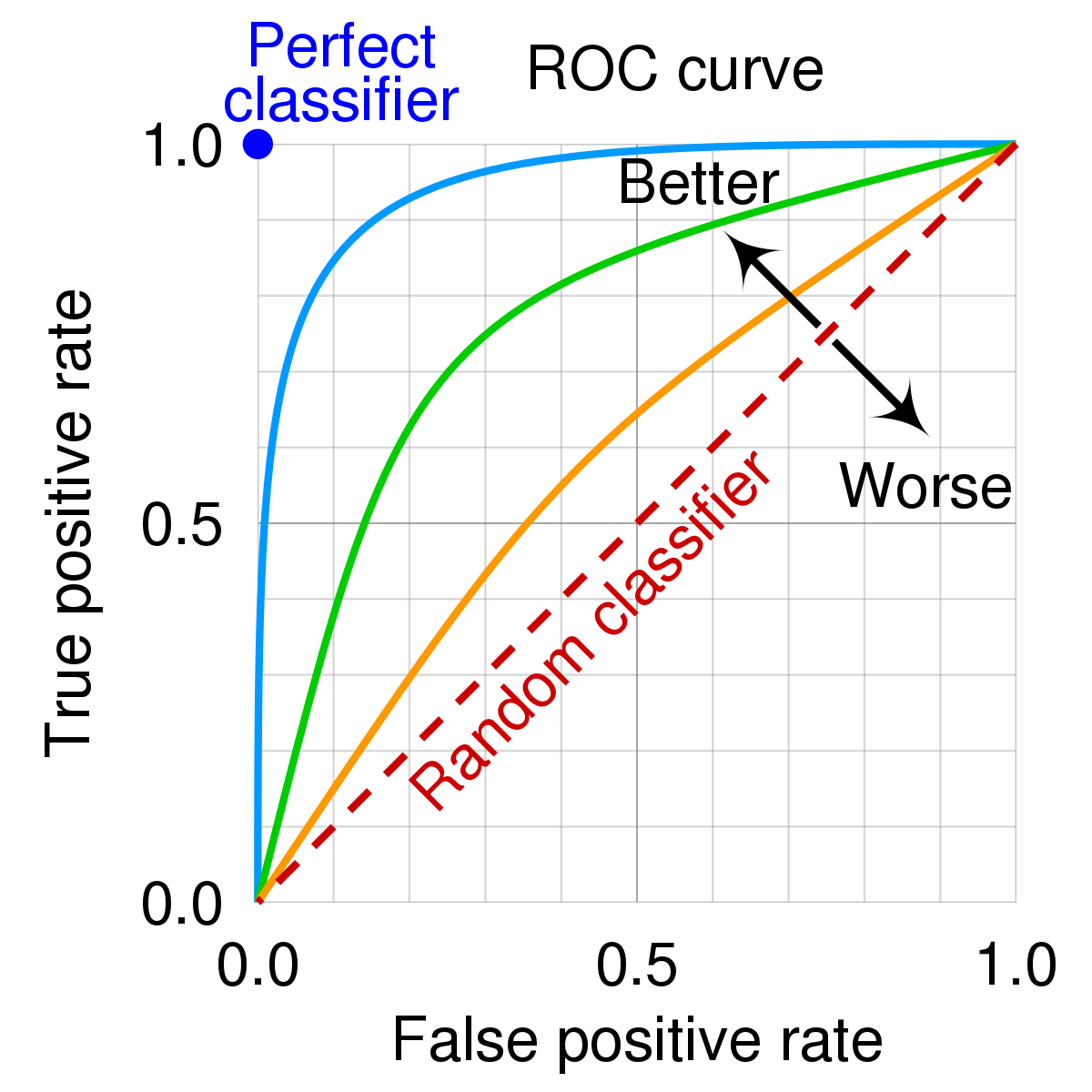

ROC Curve

The ROC curve, pictured below, sorts predictions in descending order of confidence and traces sensitivity, the true positive rate, as a confidence threshold is swept across them. As the threshold is relaxed (lowered in confidence, so that it admits more of the sorted list), we predict more “yes,” so sensitivity increases.

Info

The position of the threshold can also be read as $1 - \text{specificity}$, or the false positive rate, which is the curve's horizontal axis.
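The threshold sweep described in this section can be sketched in a few lines: sort by confidence, then record the false positive rate and true positive rate each time one more prediction is admitted as "yes." The function name `roc_points` and the scores are hypothetical, and the sketch assumes binary labels with at least one example of each class.

```python
def roc_points(y_true, scores, positive=1):
    """Return (false positive rate, true positive rate) pairs for a threshold sweep."""
    n_pos = sum(1 for t in y_true if t == positive)
    n_neg = len(y_true) - n_pos
    if n_pos == 0 or n_neg == 0:
        raise ValueError("need at least one positive and one negative example")

    # Descending order of confidence, as in the description of the curve
    ranked = sorted(zip(scores, y_true), key=lambda pair: pair[0], reverse=True)

    points = [(0.0, 0.0)]  # strictest threshold: nothing is predicted "yes"
    tp = fp = 0
    for i, (score, label) in enumerate(ranked):
        if label == positive:
            tp += 1
        else:
            fp += 1
        # Emit a point only after the last item in a group of tied scores
        if i + 1 == len(ranked) or ranked[i + 1][0] != score:
            points.append((fp / n_neg, tp / n_pos))
    return points


# Hypothetical labels and confidence scores
y_true = [1, 1, 0, 1, 0, 0]
scores = [0.9, 0.8, 0.7, 0.6, 0.4, 0.2]
points = roc_points(y_true, scores)
```

The sweep always starts at (0, 0) and ends at (1, 1), and neither rate can decrease along the way because admitting more predictions only adds to the counts.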

AUC

The more the curve bows toward the top-left corner, the better the performance. Thus, area under curve (AUC) is another common metric for performance, varying between 0 and 1, where 0.5 corresponds to random guessing.

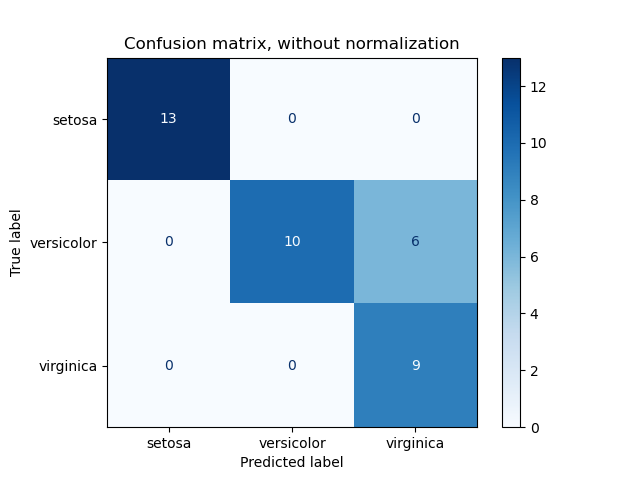
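One way to approximate the area under the curve is the trapezoid rule applied to (false positive rate, true positive rate) points. This is a sketch under that assumption; the function name `auc_trapezoid` and the example point lists are illustrative.

```python
def auc_trapezoid(points):
    """Area under a curve given as (x, y) points, using the trapezoid rule."""
    pts = sorted(points)  # order by false positive rate, then true positive rate
    area = 0.0
    for (x0, y0), (x1, y1) in zip(pts, pts[1:]):
        area += (x1 - x0) * (y0 + y1) / 2
    return area


# Hypothetical curves for illustration
random_guess = [(0.0, 0.0), (1.0, 1.0)]         # the diagonal
perfect = [(0.0, 0.0), (0.0, 1.0), (1.0, 1.0)]  # hugs the top-left corner
auc_random = auc_trapezoid(random_guess)
auc_perfect = auc_trapezoid(perfect)
```

The diagonal gives an area of one half and the corner-hugging curve gives an area of one, which is why a curve that bows further toward the top left scores higher.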

Confusion Matrix

Finally, a confusion matrix shows counts of actual vs predicted class values.
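A confusion matrix can be sketched by counting (actual, predicted) pairs. The function name `confusion_matrix`, the row and column orientation (rows are actual classes, columns are predicted classes), and the labels are assumptions made for this example.

```python
from collections import Counter


def confusion_matrix(y_true, y_pred, labels):
    """Rows are actual classes, columns are predicted classes, in `labels` order."""
    counts = Counter(zip(y_true, y_pred))
    return [[counts[(actual, predicted)] for predicted in labels] for actual in labels]


# Hypothetical labels: 1 = "yes", 0 = "no"
y_true = [1, 1, 1, 1, 0, 0, 0, 0, 0, 0]
y_pred = [1, 1, 1, 0, 1, 1, 0, 0, 0, 0]
matrix = confusion_matrix(y_true, y_pred, labels=[1, 0])
# matrix[0] holds the actual "yes" row: [true positives, false negatives]
# matrix[1] holds the actual "no" row:  [false positives, true negatives]
```

All of the earlier measures can be read off these four counts, and the same function extends to more than two classes by passing a longer `labels` list.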