The Markov property states that, given the present, the future is independent of the past. Markov chains use this property to model dynamic systems that transition between states: the next state depends only on the current state, not on the path taken to reach it.

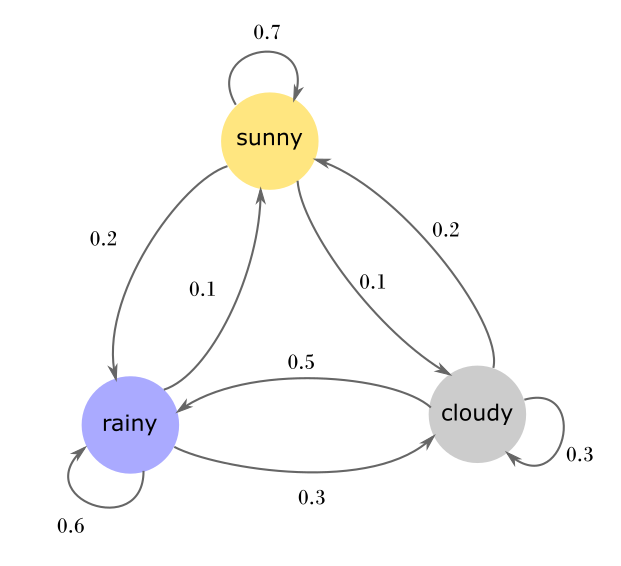

A chain consists of a set of states, a probability of starting in each state, and a matrix of transition probabilities between states. The figure above shows a graphical example.
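To make the three ingredients concrete, here is a minimal sketch that samples a trajectory from a small chain. The state names, the initial probabilities, and the transition values are hypothetical and chosen only for illustration; they are not taken from the figure.

```python
import random

# Hypothetical three-state chain; all names and numbers are illustrative.
states = ["sunny", "cloudy", "rainy"]
initial = [0.5, 0.3, 0.2]      # probability of starting in each state
transition = [                 # transition[i][j] = P(next = j | current = i)
    [0.7, 0.2, 0.1],
    [0.3, 0.4, 0.3],
    [0.2, 0.3, 0.5],
]


def sample_chain(n_steps, seed=None):
    """Draw one trajectory with n_steps transitions (n_steps + 1 states)."""
    rng = random.Random(seed)
    indices = range(len(states))
    # Pick the starting state from the initial distribution.
    current = rng.choices(indices, weights=initial)[0]
    path = [states[current]]
    for _ in range(n_steps):
        # Markov property: only the current state's row is consulted.
        current = rng.choices(indices, weights=transition[current])[0]
        path.append(states[current])
    return path


print(sample_chain(10, seed=0))
```

Each row of `transition` sums to 1 because, from any state, the chain must move somewhere (possibly back to the same state).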

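Formally, the chain consists of the state space $S = \{s_1, \dots, s_n\}$, an initial distribution $\pi_0$ over $S$, and a transition matrix $T$ with entries $T_{ij} = P(X_{t+1} = s_j \mid X_t = s_i)$. The chain advances from one time step to the next using the transition operator. If we let $\pi_t$ denote the row vector of state probabilities at time $t$, then

$$\pi_{t+1} = \pi_t T$$

describes how the probability distribution of states changes over time via our transitions.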
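The evolution of the state distribution can be sketched in a few lines. This assumes the row-vector convention, where `T[i][j]` is the probability of moving from state `i` to state `j`, so one step maps a distribution `dist` to `new[j] = sum_i dist[i] * T[i][j]`. The two-state matrix and the function names `step` and `evolve` are hypothetical, used only for illustration.

```python
def step(dist, T):
    """Apply one transition: new[j] = sum over i of dist[i] * T[i][j]."""
    n = len(T)
    return [sum(dist[i] * T[i][j] for i in range(n)) for j in range(n)]


def evolve(dist, T, n_steps):
    """Apply the transition matrix n_steps times to a starting distribution."""
    for _ in range(n_steps):
        dist = step(dist, T)
    return dist


# Hypothetical two-state chain; each row sums to 1.
T = [
    [0.9, 0.1],
    [0.5, 0.5],
]
pi0 = [1.0, 0.0]          # start in state 0 with certainty

pi1 = evolve(pi0, T, 1)   # after one step: [0.9, 0.1]
pi2 = evolve(pi0, T, 2)   # after two steps
print(pi1, pi2)
```

Starting from certainty in state 0, one step simply copies out row 0 of `T`; later steps mix the rows according to how much probability sits in each state.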