Problem

Given a convex objective

Convexity

A convex set is defined as a set where for all

Intuitively, this is saying that the line between

A convex function is defined as

In other words, the function is upwards curving. This is also known as 🌈 Jensen’s Inequality.

Moreover, if

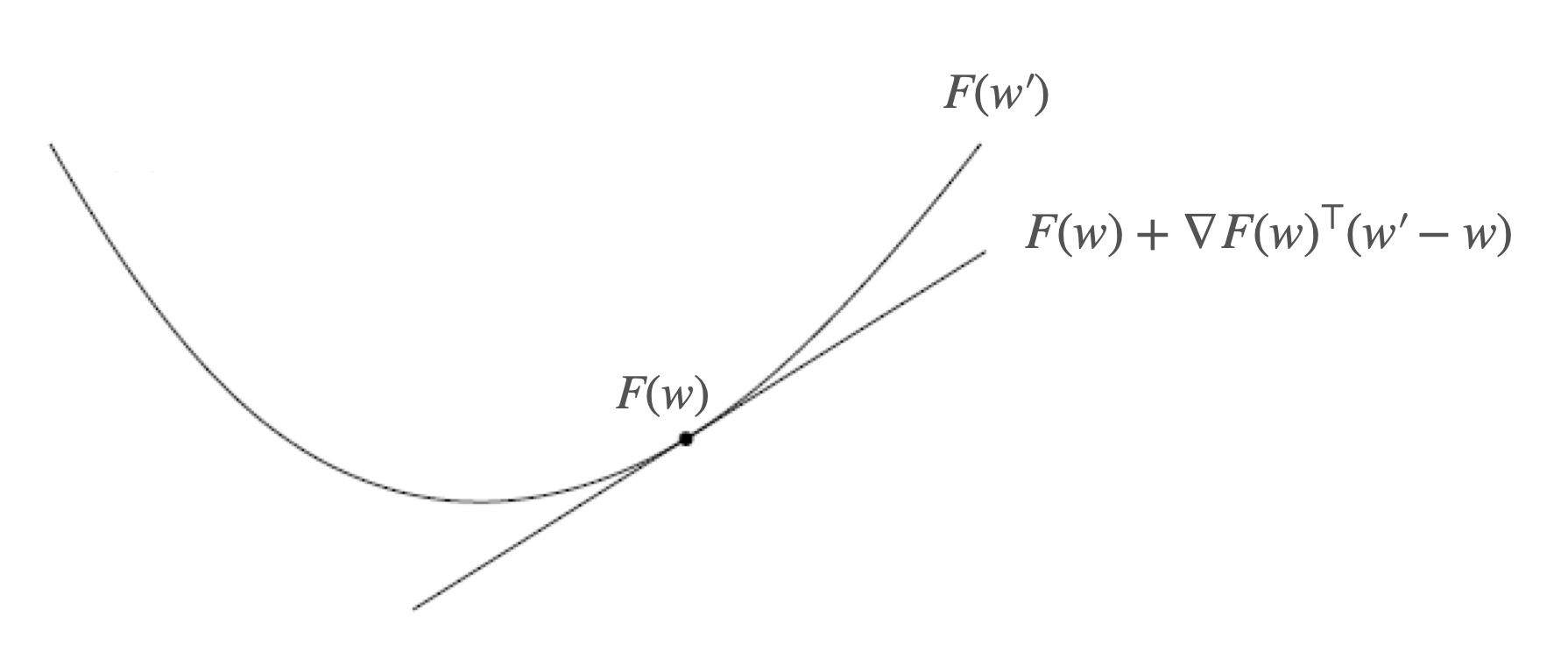

This is illustrated below.

Lastly, for all

Solution

If

Convex Properties

A convex function

A function

Gradient Descent

These two properties essentially bound our function with

- Initialize

. - For

time steps, - Update

.

- Update

For a

which gives us

With this update rule setting

Also, if we let our acceptable error

Proof of Convergence

We’ll start with the smoothness property,

By our gradient descent step, we know

and simplifying, we have

Next, we use the convex property to get

and rearranging and plugging in the gradient update above,

Then, since

we let

Adding the inequality we derived above, we get

Finally, summing

where we also use the property that

Moreover, if we also assume strong convexity with

and convergence rate