Theory

GRUs (Gated Recurrent Units) tackle the vanishing gradient problem in Recurrent Neural Networks by selecting which parts of the hidden state to modify. The idea is similar to Long Short-Term Memory, but a GRU maintains only the hidden state and no long-term cell state.

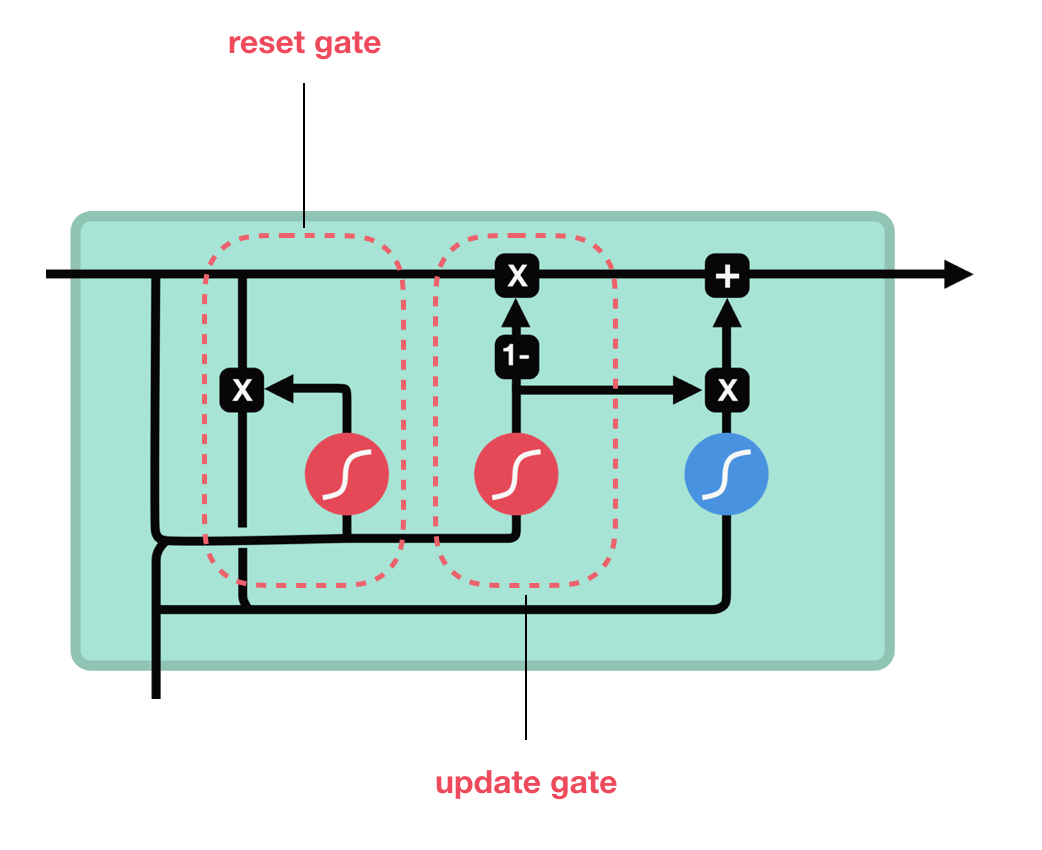

GRUs modify the hidden state using two gates.

- Reset gate uses the current input $x_t$ and the previous hidden state $h_{t-1}$ to choose parts of $h_{t-1}$ to zero out.
- Update gate uses $x_t$ and $h_{t-1}$ to select how much of the past information from $h_{t-1}$ to keep.
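The two gates can be sketched as a single GRU step in NumPy. This is a minimal sketch of one common formulation, not necessarily the exact variant these notes had in mind: the names `gru_step`, `params`, and the weight names `W_*`, `U_*`, `b_*` are assumptions made for illustration, and the update gate `z` is taken to mean "fraction of the old hidden state to keep", matching the description above. Some libraries apply the reset gate at a slightly different point in the computation.

```python
import numpy as np

def sigmoid(a):
    # Squashes every element into the open interval (0, 1).
    return 1.0 / (1.0 + np.exp(-a))

def gru_step(x_t, h_prev, params):
    """One GRU step (illustrative sketch; parameter layout is an assumption)."""
    W_r, U_r, b_r, W_z, U_z, b_z, W_h, U_h, b_h = params
    # Reset gate: which parts of h_prev to zero out before forming the candidate.
    r = sigmoid(W_r @ x_t + U_r @ h_prev + b_r)
    # Update gate: how much of h_prev to keep in the new hidden state.
    z = sigmoid(W_z @ x_t + U_z @ h_prev + b_z)
    # Candidate state, computed from the input and the reset-masked past.
    h_cand = np.tanh(W_h @ x_t + U_h @ (r * h_prev) + b_h)
    # Blend old state and candidate element-wise.
    return z * h_prev + (1.0 - z) * h_cand

def random_params(input_size, hidden_size, rng):
    # Hypothetical helper: small random weights, zero biases.
    def W(): return 0.1 * rng.standard_normal((hidden_size, input_size))
    def U(): return 0.1 * rng.standard_normal((hidden_size, hidden_size))
    def b(): return np.zeros(hidden_size)
    return (W(), U(), b(), W(), U(), b(), W(), U(), b())

rng = np.random.default_rng(0)
params = random_params(input_size=2, hidden_size=3, rng=rng)
h = np.zeros(3)
for x_t in rng.standard_normal((5, 2)):  # a toy sequence of 5 inputs
    h = gru_step(x_t, h, params)
```

Because the new state is an element-wise blend of the old state and a `tanh` output, there is no separate cell state to carry along: the hidden state `h` is the only memory.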

Model

The model structure is depicted below.

Note that sigmoids (in red) are used for selection since they’re bounded between 0 and 1, so each gate value acts as a soft, per-element mask.
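To illustrate why a bounded sigmoid suits selection, here is a small sketch with made-up numbers (the arrays `pre_activations` and `h_prev` are purely illustrative): a strongly negative pre-activation nearly zeroes an entry, a strongly positive one nearly passes it through, and zero keeps exactly half.

```python
import numpy as np

def sigmoid(a):
    return 1.0 / (1.0 + np.exp(-a))

pre_activations = np.array([-6.0, 0.0, 6.0])  # illustrative gate inputs
gate = sigmoid(pre_activations)               # close to 0, exactly 0.5, close to 1
h_prev = np.array([2.0, 2.0, 2.0])            # illustrative hidden state
masked = gate * h_prev                        # element-wise soft selection
print(gate, masked)
```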
