Theory

Generalized Linear Models generalize linear regression to non-linear data by transforming either the output $y$ or the input $x$.

- With a link function $g$, our prediction is $\hat{y} = g^{-1}(w^\top x)$. This link is derived by associating $y$ with a certain exponential family distribution, chosen depending on the type of output we expect.
- With basis functions $\phi_1, \dots, \phi_k$, our prediction is $\hat{y} = w^\top \phi(x)$, where $\phi(x) = [\phi_1(x), \dots, \phi_k(x)]^\top$. This usually works well with Gaussian basis functions rather than polynomial ones.
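The two options above can be sketched in a few lines of plain Python. This is a minimal illustration, not a reference implementation: the sigmoid inverse link, the unit-width Gaussian bumps, and all weights, inputs, and centers are assumptions chosen only to make the example concrete.

```python
import math

def dot(w, x):
    """Inner product of two equal-length sequences."""
    return sum(wi * xi for wi, xi in zip(w, x))

def sigmoid(eta):
    """Inverse of the logit link; maps a real number into (0, 1)."""
    return 1.0 / (1.0 + math.exp(-eta))

def predict_with_link(w, x, inverse_link=sigmoid):
    """Transform the output: y_hat = g^{-1}(w^T x)."""
    return inverse_link(dot(w, x))

def predict_with_basis(w, x, basis_functions):
    """Transform the input: y_hat = w^T phi(x), one weight per basis function."""
    phi = [f(x) for f in basis_functions]
    return dot(w, phi)

def gaussian_bump(center):
    """Unit-width Gaussian basis function centered at `center` (hypothetical)."""
    def phi(x):
        sq_dist = sum((xi - ci) ** 2 for xi, ci in zip(x, center))
        return math.exp(-sq_dist / 2.0)
    return phi

# Hypothetical weights and input, chosen only for illustration.
x = [1.0, 2.0]

# Here w^T x = 0.5 * 1 - 0.25 * 2 = 0, so the sigmoid returns 0.5.
p = predict_with_link([0.5, -0.25], x)

# Two bumps: one centered on x itself, one two units away along the first axis.
basis = [gaussian_bump([1.0, 2.0]), gaussian_bump([3.0, 2.0])]
y_hat = predict_with_basis([2.0, 1.0], x, basis)  # 2 * 1 + 1 * exp(-2)
```

In both cases the model stays linear in the weights; only the output or the input is passed through a fixed non-linear map.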

Exponential Family

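The exponential family of distributions is defined by the form

$$p(y; \eta) = b(y) \exp\left(\eta^\top T(y) - a(\eta)\right)$$

where $\eta$ is the natural parameter, $T(y)$ is the sufficient statistic, $a(\eta)$ is the log partition function, and $b(y)$ is the base measure.

Distributions that fall into this family include the following.

- Bernoulli: $p(y; \mu) = \mu^y (1 - \mu)^{1 - y} = \exp\left(y \log\frac{\mu}{1 - \mu} + \log(1 - \mu)\right)$, where $\eta = \log\frac{\mu}{1 - \mu}$, $T(y) = y$, $a(\eta) = \log(1 + e^\eta)$, and $b(y) = 1$.
- Gaussian (for simplicity, let $\sigma^2 = 1$): $p(y; \mu) = \frac{1}{\sqrt{2\pi}} \exp\left(-\frac{y^2}{2}\right) \exp\left(\mu y - \frac{\mu^2}{2}\right)$, where $\eta = \mu$, $T(y) = y$, $a(\eta) = \frac{\eta^2}{2}$, and $b(y) = \frac{1}{\sqrt{2\pi}} \exp\left(-\frac{y^2}{2}\right)$.
- Multinomial, Poisson, Gamma, Exponential, Beta, and Dirichlet.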
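As a sanity check, the sketch below evaluates the Bernoulli and unit-variance Gaussian densities both directly and through the generic form $b(y)\exp(\eta\,T(y) - a(\eta))$. It assumes the common parameterization with scalar $\eta$, $T(y) = y$, and mean $\mu$; the helper names and the test values are hypothetical.

```python
import math

def exp_family(y, eta, T, a, b):
    """Density p(y; eta) = b(y) * exp(eta * T(y) - a(eta)) for scalar eta."""
    return b(y) * math.exp(eta * T(y) - a(eta))

def bernoulli_pmf(y, mu):
    """Bernoulli with mean mu: eta = log(mu / (1 - mu)), a(eta) = log(1 + e^eta), b(y) = 1."""
    eta = math.log(mu / (1.0 - mu))
    return exp_family(
        y, eta,
        T=lambda v: v,
        a=lambda e: math.log(1.0 + math.exp(e)),
        b=lambda v: 1.0,
    )

def gaussian_pdf(y, mu):
    """Unit-variance Gaussian: eta = mu, a(eta) = eta^2 / 2, b(y) = exp(-y^2 / 2) / sqrt(2 pi)."""
    return exp_family(
        y, mu,
        T=lambda v: v,
        a=lambda e: e * e / 2.0,
        b=lambda v: math.exp(-v * v / 2.0) / math.sqrt(2.0 * math.pi),
    )

mu = 0.3  # arbitrary illustrative mean
direct_bernoulli = [mu ** y * (1.0 - mu) ** (1 - y) for y in (0, 1)]
family_bernoulli = [bernoulli_pmf(y, mu) for y in (0, 1)]

# Arbitrary illustrative point y = 1.2 with mean 0.5.
direct_gaussian = math.exp(-((1.2 - 0.5) ** 2) / 2.0) / math.sqrt(2.0 * math.pi)
family_gaussian = gaussian_pdf(1.2, 0.5)
```

The direct and exponential-family values agree up to floating-point rounding, which is a quick way to confirm a proposed choice of $\eta$, $T$, $a$, and $b$.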

Another way we can view the link function $g$ is that its inverse predicts the expected value of the distribution we select, $\hat{y} = g^{-1}(\eta) = \mathbb{E}[y \mid x]$, with $\eta = w^\top x$.
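As a worked instance, assume the standard parameterizations: a Bernoulli with mean $\mu$ and natural parameter $\eta = \log\frac{\mu}{1 - \mu}$, and a unit-variance Gaussian with $\eta = \mu$. Solving each for the mean gives

$$
\begin{aligned}
\text{Bernoulli:} \quad & \mathbb{E}[y \mid x] = \mu = \frac{1}{1 + e^{-\eta}} = \frac{1}{1 + e^{-w^\top x}}, \\
\text{Gaussian:} \quad & \mathbb{E}[y \mid x] = \mu = \eta = w^\top x.
\end{aligned}
$$

Under these assumptions, the Bernoulli choice yields logistic regression and the Gaussian choice recovers ordinary linear regression.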

Radial Basis Functions

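Radial basis functions are especially powerful and common. They use

$$\phi_j(x) = \exp\left(-\frac{\lVert x - c_j \rVert^2}{2\sigma^2}\right)$$

where $c_j$ is the center of the $j$-th basis function and $\sigma$ controls its width.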

By projecting our $d$-dimensional data onto $k$ RBFs, we can change how the data is represented.

- If $k < d$, we essentially perform dimensionality reduction. Conversely, with $k > d$, we increase dimensionality.
- With $k = n$, one RBF centered on each of our $n$ datapoints, we switch to a dual representation that relies on pairwise relationships between our datapoints.
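The regimes above can be illustrated with a small feature map. This sketch assumes Gaussian RBFs of the form $\exp(-\lVert x - c \rVert^2 / (2\sigma^2))$ and uses the datapoints themselves as centers; the toy dataset and the function name `rbf_features` are hypothetical.

```python
import math

def rbf_features(x, centers, sigma=1.0):
    """Map one input vector x to [phi_1(x), ..., phi_k(x)] using Gaussian RBFs."""
    feats = []
    for c in centers:
        sq_dist = sum((xi - ci) ** 2 for xi, ci in zip(x, c))
        feats.append(math.exp(-sq_dist / (2.0 * sigma ** 2)))
    return feats

# Hypothetical toy dataset: n = 4 points in d = 3 dimensions.
X = [
    [0.0, 0.0, 0.0],
    [1.0, 0.0, 0.0],
    [0.0, 1.0, 0.0],
    [1.0, 1.0, 1.0],
]

# k = 2 < d: each 3-dimensional point is compressed to 2 features.
reduced = [rbf_features(x, X[:2]) for x in X]

# k = n = 4 > d: one RBF per datapoint. Row i holds the similarity of
# point i to every datapoint, so the result is an n x n matrix of
# pairwise relationships (a Gaussian kernel matrix).
gram = [rbf_features(x, X) for x in X]
```

In the $k = n$ case the feature matrix is symmetric with ones on the diagonal, since each point is at distance zero from its own center.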

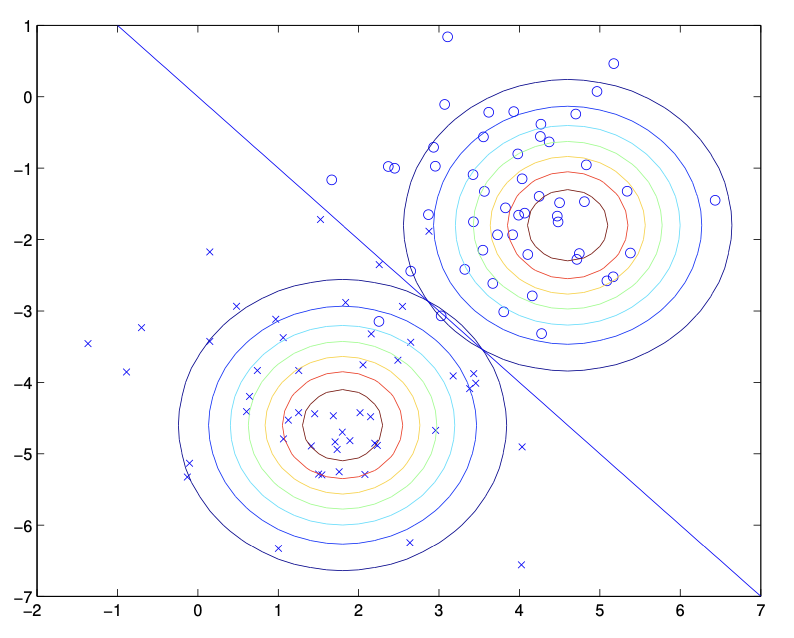

The following is an example of two Gaussian kernels for binary classification. (This is technically a Gaussian Discriminant Analysis model, but the idea is similar.)

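Using GLMs, we use the same idea of applying weights to features but get more versatile results. Specifically, a GLM is a model that fits any function of the form $\hat{y} = g^{-1}(w^\top \phi(x))$, where $g$ is a link function and $\phi$ is a basis expansion of the input (either may be the identity).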

Model

Our model is the same as linear regression, with the addition of a link function or basis functions. We still optimize the weights $w$.
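One way to optimize the weights is gradient ascent on the log-likelihood. The sketch below assumes a canonical link (for example logit for Bernoulli or identity for Gaussian), in which case the gradient has the same form as in linear regression: $\sum_i (y_i - \hat{y}_i)\,\phi(x_i)$. The function `fit_glm`, the learning rate, the step count, and the toy data are all hypothetical choices for illustration.

```python
import math

def sigmoid(eta):
    """Inverse of the logit link."""
    return 1.0 / (1.0 + math.exp(-eta))

def identity(x):
    """Default basis: use the raw input features."""
    return x

def fit_glm(X, y, inverse_link, basis=identity, lr=0.1, steps=500):
    """Gradient ascent on the log-likelihood of a GLM with a canonical link.

    Each step moves w along sum_i (y_i - y_hat_i) * phi(x_i).
    """
    Phi = [basis(x) for x in X]
    w = [0.0] * len(Phi[0])
    for _ in range(steps):
        grad = [0.0] * len(w)
        for phi, target in zip(Phi, y):
            y_hat = inverse_link(sum(wj * pj for wj, pj in zip(w, phi)))
            for j, pj in enumerate(phi):
                grad[j] += (target - y_hat) * pj
        w = [wj + lr * gj for wj, gj in zip(w, grad)]
    return w

def add_bias(x):
    """Basis expansion that prepends a constant feature."""
    return [1.0] + x

# Hypothetical 1-D binary data: negatives on the left, positives on the right.
X = [[-2.0], [-1.0], [1.0], [2.0]]
y = [0, 0, 1, 1]

w = fit_glm(X, y, inverse_link=sigmoid, basis=add_bias)
probs = [sigmoid(sum(wj * pj for wj, pj in zip(w, add_bias(x)))) for x in X]
```

Swapping `sigmoid` for the identity function turns the same loop into gradient-based linear regression, which reflects how little the model changes beyond the link or basis.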