StyleGAN improves on the generator design of a standard Generative Adversarial Network by incorporating ideas from style transfer. Specifically, we introduce an intermediate latent space $\mathcal{W}$ and use it to control the generator's "style" at each layer.

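To create and use this intermediate space, we use two networks (trained end-to-end). The first is the mapping network $f: \mathcal{Z} \to \mathcal{W}$, an 8-layer MLP that transforms a latent code $\mathbf{z}$ into an intermediate code $\mathbf{w}$. The second is the synthesis network $g$, which generates the image. Learned affine transforms specialize $\mathbf{w}$ into styles $\mathbf{y} = (\mathbf{y}_s, \mathbf{y}_b)$. These styles control adaptive instance normalization (AdaIN), which is applied to each feature map $\mathbf{x}_i$ after each convolution layer:

$$\text{AdaIN}(\mathbf{x}_i, \mathbf{y}) = \mathbf{y}_{s,i}\,\frac{\mathbf{x}_i - \mu(\mathbf{x}_i)}{\sigma(\mathbf{x}_i)} + \mathbf{y}_{b,i}$$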
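The two pieces that turn $\mathbf{z}$ into per-layer styles can be sketched as below. This is a minimal, untrained illustration under stated assumptions. `MappingNetwork` and `StyleAffine` are hypothetical names. The weights are random rather than learned. The layer width is shrunk to 64 for the demo. Only the overall shape follows the description above: an MLP from $\mathbf{z}$ to $\mathbf{w}$, then one affine map per layer producing $(\mathbf{y}_s, \mathbf{y}_b)$.

```python
import numpy as np

def leaky_relu(x, slope=0.2):
    return np.where(x >= 0, x, slope * x)

class MappingNetwork:
    """Hypothetical, untrained stand-in for f: Z -> W (a stack of dense layers)."""
    def __init__(self, dim=512, n_layers=8, seed=0):
        rng = np.random.default_rng(seed)
        # Random He-style init (assumption); a real mapping network is trained.
        self.weights = [rng.normal(0.0, np.sqrt(2.0 / dim), (dim, dim)) for _ in range(n_layers)]
        self.biases = [np.zeros(dim) for _ in range(n_layers)]

    def __call__(self, z):
        h = z
        for W, b in zip(self.weights, self.biases):
            h = leaky_relu(h @ W + b)
        return h

class StyleAffine:
    """Hypothetical affine transform A: w -> (y_s, y_b) for one layer with `channels` feature maps."""
    def __init__(self, w_dim, channels, seed=1):
        rng = np.random.default_rng(seed)
        self.W = rng.normal(0.0, 1.0 / np.sqrt(w_dim), (w_dim, 2 * channels))
        self.b = np.zeros(2 * channels)
        self.channels = channels

    def __call__(self, w):
        y = w @ self.W + self.b
        return y[:self.channels], y[self.channels:]  # (per-channel scale, per-channel bias)

# Usage: map one latent code to a style for a 16-channel layer.
f = MappingNetwork(dim=64, n_layers=8)
z = np.random.default_rng(42).standard_normal(64)
w = f(z)
y_s, y_b = StyleAffine(64, channels=16)(w)
print(w.shape, y_s.shape, y_b.shape)  # (64,) (16,) (16,)
```

In the full architecture, every AdaIN layer would have its own affine transform, while all of them read the same $\mathbf{w}$.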

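Notably, the synthesis network doesn't take the latent code as a direct input. Instead, it starts from a learned constant $4 \times 4 \times 512$ tensor, and the intermediate latent code affects the image only through the style injections.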

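We also inject noise into the synthesis network. After each convolution, a single-channel image of uncorrelated Gaussian noise is broadcast to all feature maps. Each feature map scales it by its own learned factor, and the result is added to the convolution's output.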
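One step of the synthesis path (constant input, then noise, then AdaIN) might look like the sketch below. Several assumptions apply. The convolution is omitted. The "learned" constant, noise factors, and style are placeholder values rather than trained parameters. `adain` and `add_noise` are hypothetical helper names. Feature maps use a `(C, H, W)` layout.

```python
import numpy as np

def adain(x, y_s, y_b, eps=1e-8):
    """AdaIN on a (C, H, W) map: normalize each channel, then apply per-channel scale y_s and bias y_b."""
    mu = x.mean(axis=(1, 2), keepdims=True)
    sigma = x.std(axis=(1, 2), keepdims=True)
    return y_s[:, None, None] * (x - mu) / (sigma + eps) + y_b[:, None, None]

def add_noise(x, noise_scale, rng):
    """Broadcast one single-channel Gaussian noise image to all C channels, each scaled by its own factor."""
    _, h, w = x.shape
    noise = rng.standard_normal((1, h, w))         # a single noise image for this layer
    return x + noise_scale[:, None, None] * noise  # per-channel scaling, then add

rng = np.random.default_rng(0)
C = 8
const_input = rng.standard_normal((C, 4, 4))  # placeholder for the learned 4x4 constant (assumption)
noise_scale = np.full(C, 0.1)                 # placeholder "learned" noise factors (assumption)
y_s, y_b = np.ones(C), np.zeros(C)            # placeholder style, as if produced by A(w)

x = adain(add_noise(const_input, noise_scale, rng), y_s, y_b)
```

With the identity style used here, every channel of `x` ends up with mean 0 and standard deviation 1. In a real layer, the style from $\mathbf{w}$ would then set those statistics.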

Disentanglement

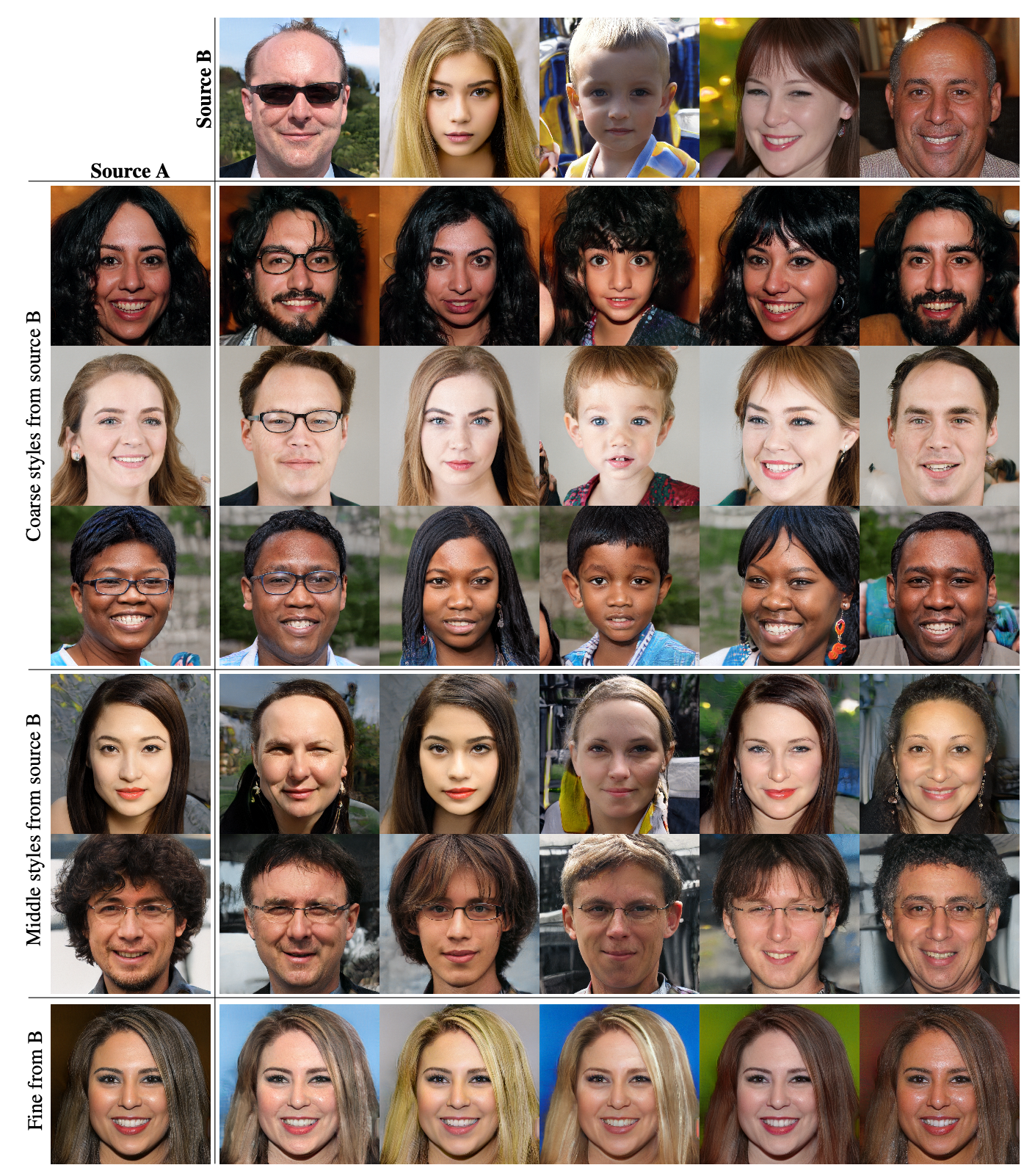

The core contribution of this architecture is a clean separation of stochastic features (controlled by the noise injections) from style (controlled by the style injections). The network also disentangles different scales of style. Because AdaIN normalizes each feature map before applying a new style, previously applied styles are overwritten. As a result, each style controls only the convolution that follows it, until the next AdaIN resets the statistics.
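The claim that AdaIN discards earlier styles can be checked numerically in the extreme case where no convolution sits between two AdaIN calls. In that case, applying style 1 and then style 2 gives the same result as applying style 2 alone. This is a minimal sketch assuming a `(C, H, W)` feature map and random placeholder styles. In the real network, a convolution sits in between, so style 1 still shapes that one convolution's output before it is reset.

```python
import numpy as np

def adain(x, y_s, y_b, eps=1e-8):
    """Normalize each channel of a (C, H, W) map, then apply per-channel scale and bias."""
    mu = x.mean(axis=(1, 2), keepdims=True)
    sigma = x.std(axis=(1, 2), keepdims=True)
    return y_s[:, None, None] * (x - mu) / (sigma + eps) + y_b[:, None, None]

rng = np.random.default_rng(0)
x = rng.standard_normal((4, 8, 8))
# Random placeholder styles (positive scales, arbitrary biases).
style_1 = (rng.uniform(0.5, 2.0, 4), rng.standard_normal(4))
style_2 = (rng.uniform(0.5, 2.0, 4), rng.standard_normal(4))

stacked = adain(adain(x, *style_1), *style_2)  # style 1, then style 2
direct = adain(x, *style_2)                    # style 2 only
print(np.allclose(stacked, direct))  # True
```

The second normalization removes the per-channel mean and scale that style 1 set, so nothing of style 1 survives.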

For example, in the picture above, we can take the latent codes that generated source A and source B, then mix their styles. Coarse, middle, and fine styles (injected from earlier to later in the network) capture progressively finer scales, ranging from head position and age to hair color.
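Style mixing amounts to choosing, per AdaIN layer, which intermediate code feeds that layer's affine transform. The sketch below assumes a $1024 \times 1024$ generator with 18 style inputs (two per resolution, from $4^2$ to $1024^2$). It groups them as coarse ($4^2$–$8^2$), middle ($16^2$–$32^2$), and fine ($64^2$–$1024^2$). `mix_styles`, `NUM_LAYERS`, and `RANGES` are hypothetical names, and the codes are random placeholders rather than outputs of a trained mapping network.

```python
import numpy as np

# Assumption: 18 style inputs, two per resolution from 4x4 up to 1024x1024.
NUM_LAYERS = 18
RANGES = {
    "coarse": range(0, 4),   # 4x4 and 8x8
    "middle": range(4, 8),   # 16x16 and 32x32
    "fine": range(8, 18),    # 64x64 through 1024x1024
}

def mix_styles(w_a, w_b, take_from_b):
    """Return one w per layer: w_b for layer groups listed in take_from_b, w_a everywhere else."""
    layers_from_b = {i for group in take_from_b for i in RANGES[group]}
    return np.stack([w_b if i in layers_from_b else w_a for i in range(NUM_LAYERS)])

rng = np.random.default_rng(0)
w_a, w_b = rng.standard_normal(512), rng.standard_normal(512)  # placeholder codes for sources A and B
ws = mix_styles(w_a, w_b, take_from_b=["coarse"])  # coarse styles from B, the rest from A
print(ws.shape)  # (18, 512)
```

Each row of `ws` would then pass through that layer's own affine transform to produce its AdaIN style.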

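Another key disentanglement is between different style dimensions, such as age and gender. The authors hypothesize that the training data may not cover every combination of these dimensions. Because $\mathcal{Z}$ has a fixed sampling distribution, the mapping from the latent code $\mathbf{z}$ to image features must then become curved (entangled), so that the missing combinations are never sampled.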

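If we use an intermediate latent space $\mathcal{W}$ instead, its distribution isn't fixed in advance. Its sampling density is induced by the learned mapping network, which can "undo" much of this warping so that the factors of variation in $\mathcal{W}$ become more linear.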