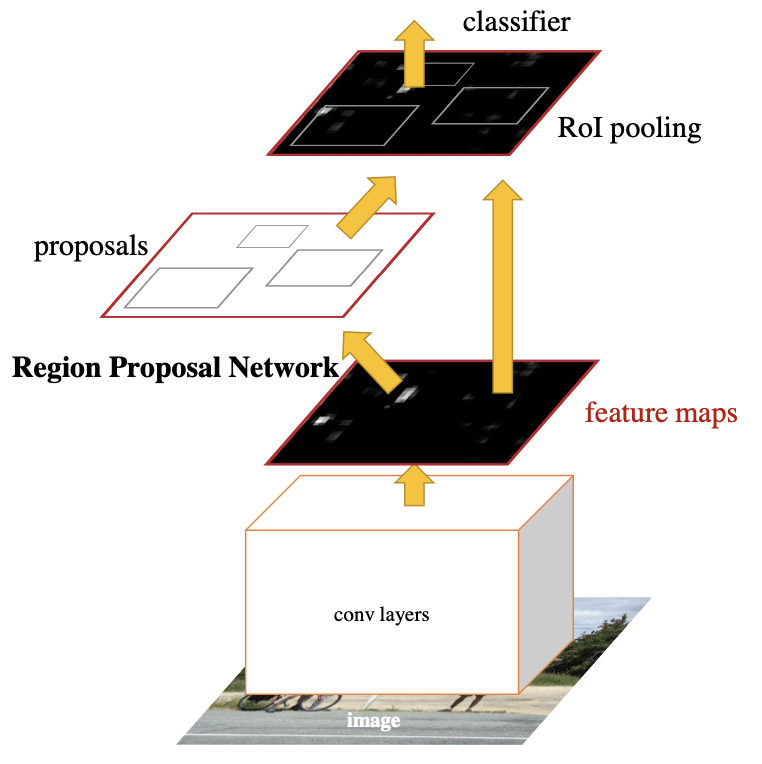

Faster R-CNN is a two-stage object detection network that builds on ideas from R-CNN and Fast R-CNN. Following the traditional object detection pipeline, we have a region proposal stage and a classification stage; the key innovation in Faster R-CNN is that both stages, trained as Convolutional Neural Networks, operate on a shared feature map rather than the original image.

We first use a feature network (such as VGG-16) to convert the image into a feature map. We’ll use this feature map for both region proposal and classification.

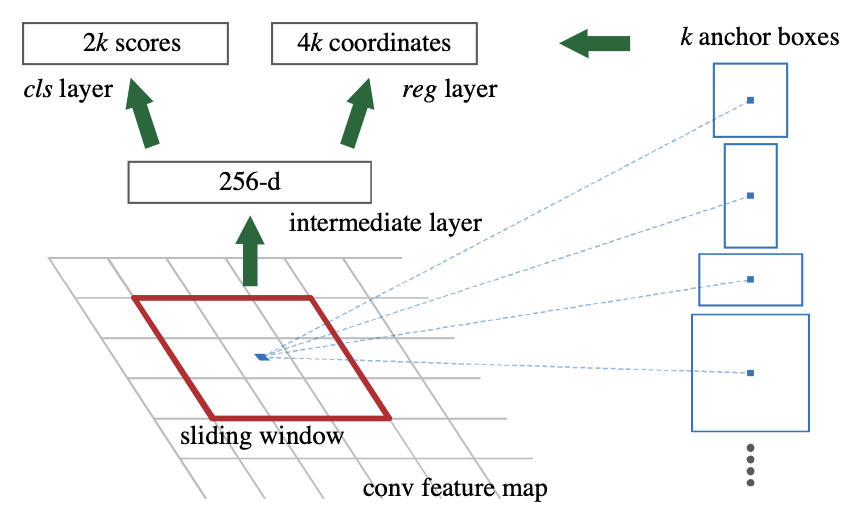

Region Proposal Network

The Region Proposal Network (RPN) predicts regions of interest (RoI) from the feature map. To do so, we slide a window across the feature map and feed the window into a CNN; this CNN predicts two things:

- Coordinates, which represent the location (center coordinate) and scale of the bounding box.
- Objectness scores, which estimate the probability of an object or no object.

Anchor Boxes

Anchor boxes are reference boxes of varying scales and aspect ratios that allow the RPN to specialize each of its coordinate and objectness predictions to different objects. In Faster R-CNN, we use 3 scales and 3 aspect ratios, giving 9 anchors (and so 9 predictions) at each window position; during training, ground truth boxes are (typically) assigned to the anchor boxes that most resemble them, thus encouraging specialization.
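The anchor layout can be sketched in NumPy as follows. This is a minimal illustration, not a reference implementation: the function names `make_anchors` and `shift_anchors` are hypothetical, the box sizes (128, 256, 512 pixels) and ratios (1:2, 1:1, 2:1) are the values reported in the Faster R-CNN paper, and the stride of 16 and the choice to center anchors on each feature-map cell are assumptions.

```python
import numpy as np

def make_anchors(sizes=(128, 256, 512), ratios=(0.5, 1.0, 2.0)):
    """Return (len(sizes) * len(ratios), 4) anchors as (x1, y1, x2, y2), centered at the origin."""
    anchors = []
    for size in sizes:
        area = float(size) ** 2
        for ratio in ratios:  # ratio = height / width
            w = np.sqrt(area / ratio)  # keeps w * h equal to the target area
            h = w * ratio
            anchors.append([-w / 2, -h / 2, w / 2, h / 2])
    return np.array(anchors)

def shift_anchors(anchors, feat_h, feat_w, stride=16):
    """Copy the base anchors to every feature-map location (assumed stride in image pixels)."""
    xs = (np.arange(feat_w) + 0.5) * stride  # assumed: anchor centers sit at cell centers
    ys = (np.arange(feat_h) + 0.5) * stride
    cx, cy = np.meshgrid(xs, ys)
    shifts = np.stack([cx, cy, cx, cy], axis=-1).reshape(-1, 1, 4)
    return (shifts + anchors[None]).reshape(-1, 4)

base = make_anchors()
all_anchors = shift_anchors(base, feat_h=2, feat_w=3)
print(base.shape, all_anchors.shape)
```

With 3 scales and 3 aspect ratios, every feature-map location carries 9 anchors, so a 2 by 3 feature map yields 54 anchors in total.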

Training

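Specifically, we assign an anchor to have an object (positive) if it has the highest IoU with a ground truth box or an IoU above 0.7 with any ground truth box; we assign it no object (negative) if its IoU is below 0.3 for every ground truth box. Anchors that are neither positive nor negative do not contribute to training.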
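A rough sketch of this assignment rule in NumPy is shown below. The helper names `iou_matrix` and `label_anchors` are hypothetical, boxes are assumed to be in `(x1, y1, x2, y2)` format, and the thresholds of 0.7 and 0.3 are the ones reported in the Faster R-CNN paper, passed in as parameters here.

```python
import numpy as np

def iou_matrix(anchors, gt_boxes):
    """Pairwise IoU between (A, 4) anchors and (G, 4) ground truth boxes."""
    a = anchors[:, None, :]
    g = gt_boxes[None, :, :]
    inter_w = np.clip(np.minimum(a[..., 2], g[..., 2]) - np.maximum(a[..., 0], g[..., 0]), 0, None)
    inter_h = np.clip(np.minimum(a[..., 3], g[..., 3]) - np.maximum(a[..., 1], g[..., 1]), 0, None)
    inter = inter_w * inter_h
    area_a = (a[..., 2] - a[..., 0]) * (a[..., 3] - a[..., 1])
    area_g = (g[..., 2] - g[..., 0]) * (g[..., 3] - g[..., 1])
    return inter / (area_a + area_g - inter)

def label_anchors(anchors, gt_boxes, pos_thresh=0.7, neg_thresh=0.3):
    """Return one label per anchor: 1 = object, 0 = no object, -1 = ignored."""
    iou = iou_matrix(anchors, gt_boxes)
    best_per_anchor = iou.max(axis=1)
    labels = np.full(len(anchors), -1, dtype=np.int64)
    labels[best_per_anchor < neg_thresh] = 0
    labels[best_per_anchor > pos_thresh] = 1
    # The anchor(s) with the highest IoU for each ground truth box are also positive,
    # so every ground truth box with any overlap gets at least one anchor.
    best_per_gt = iou.max(axis=0)
    is_best = (iou == best_per_gt[None, :]) & (best_per_gt[None, :] > 0)
    labels[is_best.any(axis=1)] = 1
    return labels
```

The highest-IoU rule matters when no anchor clears the positive threshold for some ground truth box: without it, that box would contribute no positive examples.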

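To train the RPN, we optimize objectness loss and coordinate loss,

$$
L(\{p_i\}, \{t_i\}) = \frac{1}{N_{cls}} \sum_i L_{cls}(p_i, p_i^*) + \lambda \frac{1}{N_{reg}} \sum_i p_i^* L_{reg}(t_i, t_i^*)
$$

where $p_i$ is the predicted objectness of anchor $i$, $p_i^*$ is its label (1 for positive, 0 for negative), $t_i$ and $t_i^*$ are the predicted and ground truth box coordinates parameterized relative to the anchor, $N_{cls}$ and $N_{reg}$ are normalization terms, and $\lambda$ balances the two losses. $L_{cls}$ is the log loss over the two classes (object or no object), and the factor $p_i^*$ means only positive anchors contribute to the coordinate loss. The coordinate loss is

$$
L_{reg}(t_i, t_i^*) = \sum_{j \in \{x, y, w, h\}} \text{smooth}_{L_1}(t_{i,j} - t_{i,j}^*)
$$

where in our case

$$
\text{smooth}_{L_1}(x) = \begin{cases} 0.5 x^2 & \text{if } |x| < 1 \\ |x| - 0.5 & \text{otherwise.} \end{cases}
$$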
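As a hedged illustration, an RPN-style loss can be written in NumPy as the sum of a binary log loss on objectness and a smooth L1 loss on box coordinates that only counts positive anchors. The function names are hypothetical, the box parameterization in `encode_boxes` is the standard R-CNN one and is assumed here, and the defaults for `lam`, `n_cls`, and `n_reg` are placeholders rather than the values used in any particular implementation.

```python
import numpy as np

def smooth_l1(x):
    """Quadratic near zero, linear elsewhere."""
    absx = np.abs(x)
    return np.where(absx < 1.0, 0.5 * x ** 2, absx - 0.5)

def encode_boxes(boxes, anchors):
    """Regression targets for (cx, cy, w, h) boxes relative to (cx, cy, w, h) anchors."""
    tx = (boxes[:, 0] - anchors[:, 0]) / anchors[:, 2]
    ty = (boxes[:, 1] - anchors[:, 1]) / anchors[:, 3]
    tw = np.log(boxes[:, 2] / anchors[:, 2])
    th = np.log(boxes[:, 3] / anchors[:, 3])
    return np.stack([tx, ty, tw, th], axis=1)

def rpn_loss(p, p_star, t, t_star, lam=1.0, n_cls=None, n_reg=None):
    """p: (A,) predicted object probabilities; p_star: (A,) labels in {0, 1};
    t, t_star: (A, 4) predicted and target box parameters."""
    n_cls = len(p) if n_cls is None else n_cls  # assumed default: number of sampled anchors
    n_reg = len(p) if n_reg is None else n_reg  # assumed default: number of sampled anchors
    p = np.clip(p, 1e-7, 1 - 1e-7)  # avoid log(0)
    cls = -(p_star * np.log(p) + (1 - p_star) * np.log(1 - p))
    reg = smooth_l1(t - t_star).sum(axis=1)
    # Multiplying by p_star drops the coordinate loss for negative anchors.
    return cls.sum() / n_cls + lam * (p_star * reg).sum() / n_reg
```

Ignored anchors (neither positive nor negative) are assumed to have been filtered out before calling `rpn_loss`.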

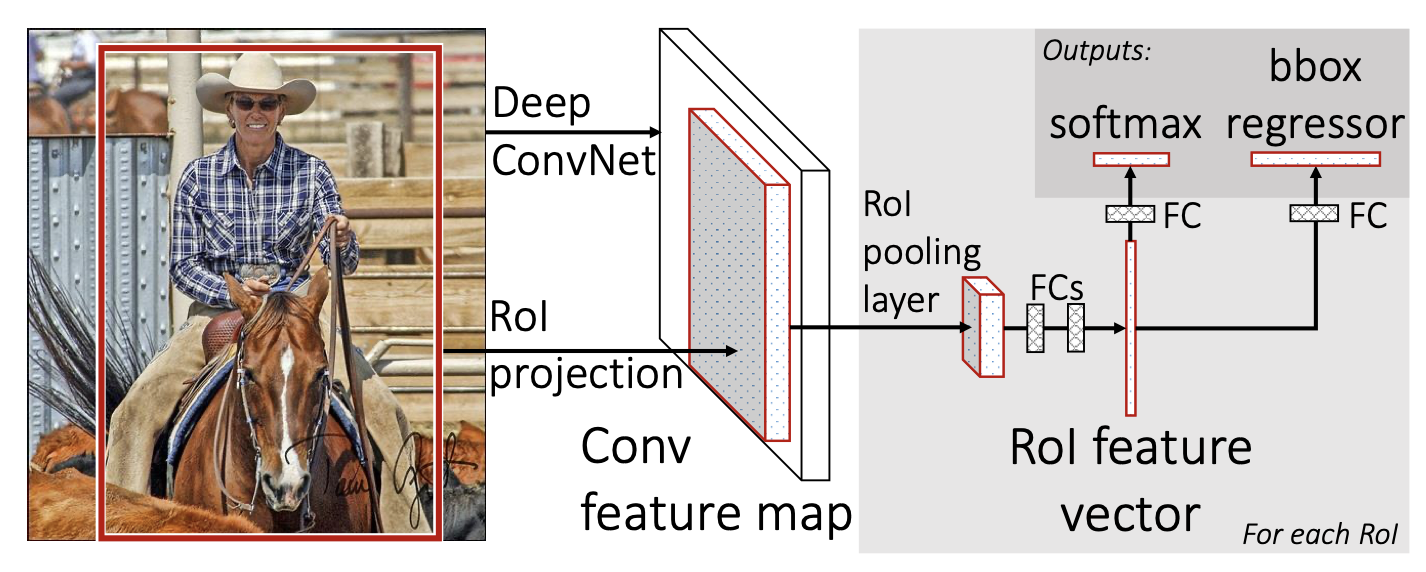

Detection Network (Fast R-CNN)

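The detection network classifies proposed RoIs using Fast R-CNN. We take in the first feature map and the RoIs, and for each RoI's projection onto the feature map, we apply RoI pooling to create another fixed-size feature map; RoI pooling essentially performs max pooling but with a fixed output resolution. That is, given a feature projection of size $h \times w$ and a target output size of $H \times W$, RoI pooling divides the projection into an $H \times W$ grid of sub-windows, each of approximate size $h/H \times w/W$, and max-pools the values in each sub-window.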
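A minimal NumPy sketch of RoI max pooling is given below. The function name `roi_max_pool` is hypothetical, the RoI is assumed to be already projected onto the feature map and given in integer cell coordinates, and the 7 by 7 default output size is an assumption taken from common VGG-16 configurations.

```python
import numpy as np

def roi_max_pool(feature_map, roi, out_h=7, out_w=7):
    """feature_map: (C, H, W); roi: (x1, y1, x2, y2) in feature-map cells, end-exclusive.
    Returns a (C, out_h, out_w) array regardless of the RoI size."""
    x1, y1, x2, y2 = roi
    region = feature_map[:, y1:y2, x1:x2]
    c, h, w = region.shape
    ys = np.linspace(0, h, out_h + 1)  # bin edges along the height
    xs = np.linspace(0, w, out_w + 1)  # bin edges along the width
    out = np.empty((c, out_h, out_w), dtype=feature_map.dtype)
    for i in range(out_h):
        y_lo = int(np.floor(ys[i]))
        y_hi = max(int(np.ceil(ys[i + 1])), y_lo + 1)  # every bin covers at least one cell
        for j in range(out_w):
            x_lo = int(np.floor(xs[j]))
            x_hi = max(int(np.ceil(xs[j + 1])), x_lo + 1)
            out[:, i, j] = region[:, y_lo:y_hi, x_lo:x_hi].max(axis=(1, 2))
    return out

fm = np.arange(16).reshape(1, 4, 4)
print(roi_max_pool(fm, (0, 0, 4, 4), out_h=2, out_w=2))
```

Because the bin edges scale with the RoI, small and large proposals both produce the same output shape, which is what lets the fully-connected layers that follow accept any RoI.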

This second feature map goes through some fully-connected layers, which give us the softmax class probabilities and the bounding box regression offsets for the RoI.

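Our loss consists of classification and regression terms,

$$
L(p, u, t^u, v) = L_{cls}(p, u) + \lambda [u \geq 1] L_{loc}(t^u, v)
$$

where $p$ is the predicted class distribution, $u$ is the true class (with $u = 0$ for background), $t^u$ is the predicted box offset for class $u$, $v$ is the ground truth box target, $L_{cls}(p, u) = -\log p_u$ is the log loss for the true class, and $L_{loc}$ is the smooth $L_1$ loss summed over the four box coordinates. The indicator $[u \geq 1]$ turns off the regression term for background RoIs.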
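For a single RoI, a detection loss of this kind (log loss on the true class plus a smooth L1 box term that is skipped for background) might be sketched in NumPy as follows. The name `detection_loss` and its argument layout are hypothetical; in particular, storing one row of box offsets per class with an unused background row at index 0 is an assumption made for simple indexing.

```python
import numpy as np

def smooth_l1(x):
    """Quadratic near zero, linear elsewhere."""
    absx = np.abs(x)
    return np.where(absx < 1.0, 0.5 * x ** 2, absx - 0.5)

def detection_loss(class_logits, box_offsets, u, v, lam=1.0):
    """class_logits: (K + 1,) raw scores, index 0 = background.
    box_offsets: (K + 1, 4) predicted offsets per class (row 0 unused, an assumption).
    u: true class index; v: (4,) ground truth box target."""
    z = class_logits - class_logits.max()  # shift for a numerically stable softmax
    log_p = z - np.log(np.exp(z).sum())
    cls = -log_p[u]  # log loss for the true class
    # Background RoIs (u == 0) have no ground truth box, so the box term is dropped.
    loc = smooth_l1(box_offsets[u] - v).sum() if u >= 1 else 0.0
    return cls + lam * loc
```

Only the offsets predicted for the true class enter the loss, so the box regressors for the other classes receive no gradient from this RoI.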